Hey guys, today we're going to talk about concurrent programming in Python. Does this term give you a headache? Don't worry, after reading this article, you'll be able to easily grasp the concept and usage of concurrent programming. Let's start exploring together!

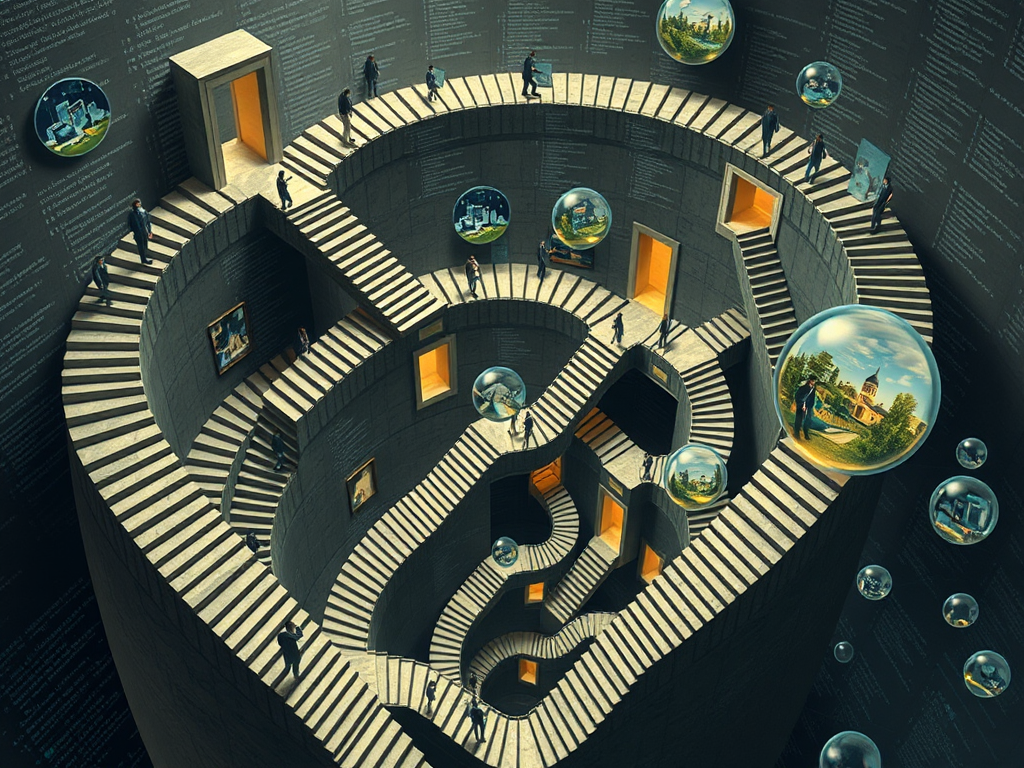

Why We Need Concurrent Programming

In a single-threaded program, tasks run one after another. When one operation is slow, the whole program has to wait for it to finish before moving on. It's like queuing up in a cafeteria, where you have to wait for the person in front of you to get their food before it's your turn. If that person takes forever to pay, all you can do is stand there and wait.

Concurrent programming is like opening multiple windows in the cafeteria, so people can be served at the same time without holding each other up. Even if someone is slow to pay, everyone else keeps moving. Used well, multi-threading lets a program do useful work while other tasks wait on I/O, and multi-processing spreads computation across CPU cores. Both can improve the program's running efficiency.

Python Concurrent Programming Modules

There are mainly three modules for implementing concurrent programming in Python:

- threading: Suitable for I/O-intensive tasks, such as network requests, file reading and writing, etc. However, be aware of the impact of GIL (Global Interpreter Lock), which may prevent performance improvements for CPU-intensive tasks in multi-threaded environments.

- multiprocessing: Suitable for CPU-intensive tasks, as each process has its own independent Python interpreter, unaffected by GIL. However, inter-process communication and context switching costs are relatively high.

- asyncio: An asynchronous programming module introduced in Python 3.4, which executes concurrent code through event loops, lighter than multi-threading.
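To make the first item a bit more concrete, here is a minimal sketch of using threading.Thread directly. The names simulated_io and run_in_threads are made up for illustration, and time.sleep stands in for a real blocking I/O call, so treat this as a toy rather than a recommended pattern:

```python
import threading
import time


def simulated_io(name, delay, results):
    """Hypothetical task: pretend to wait on I/O, then record a result."""
    time.sleep(delay)  # sleeping releases the GIL, much like real blocking I/O
    results[name] = f"{name} finished"  # each thread writes only its own key


def run_in_threads(delay=0.2, count=3):
    results = {}
    threads = [
        threading.Thread(target=simulated_io, args=(f"task-{i}", delay, results))
        for i in range(count)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread to finish
    return results, time.perf_counter() - start


results, elapsed = run_in_threads()
print(results)
print(f"elapsed: {elapsed:.2f}s")
```

Because the three waits overlap, the elapsed time should be close to one delay rather than the sum of all three.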

Next, I'll walk through concurrent.futures, which wraps threading and multiprocessing in a higher-level pool interface, and then asyncio, so you can choose the appropriate tool based on your needs.

concurrent.futures Module

The concurrent.futures module provides a higher-level concurrent interface that makes it easy to create thread pools or process pools. Let's feel its charm through an example:

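```python
import concurrent.futures

import requests

urls = [
    'https://www.baidu.com',
    'https://www.google.com',
    'https://www.python.org',
]


def fetch(url):
    # A timeout keeps one slow server from blocking a worker thread indefinitely
    response = requests.get(url, timeout=10)
    return url, response.status_code


# The with block shuts the pool down once all submitted work has finished
with concurrent.futures.ThreadPoolExecutor() as executor:
    futures = [executor.submit(fetch, url) for url in urls]
    # as_completed yields each Future as soon as it finishes
    for future in concurrent.futures.as_completed(futures):
        url, status_code = future.result()
        print(f'{url}: {status_code}')
```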

This code creates a thread pool and concurrently sends HTTP requests to three URLs. The executor.submit method schedules a callable and returns a Future object. We pass the futures to the as_completed function, which yields each Future as it finishes (not necessarily in submission order), and call its result method to get the return value.
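If you would rather get results back in the same order as the inputs, executor.map is an alternative to submit plus as_completed. Here is a small sketch under some assumptions: slow_square and squares_in_order are hypothetical names, and time.sleep stands in for a network call so the example runs without internet access:

```python
from concurrent.futures import ThreadPoolExecutor
import time


def slow_square(n):
    """Hypothetical stand-in for an I/O-bound call."""
    time.sleep(0.05 * (5 - n))  # smaller inputs deliberately take longer
    return n * n


def squares_in_order(numbers):
    with ThreadPoolExecutor(max_workers=4) as executor:
        # map() yields results in input order, whichever call finishes first
        return list(executor.map(slow_square, numbers))


print(squares_in_order([1, 2, 3, 4]))  # [1, 4, 9, 16]
```

Use as_completed when you want to react to each result as early as possible, and map when matching results to inputs matters more.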

You might ask, what if an exception occurs while executing the fetch function? Don't worry, let's see how to handle exceptions gracefully:

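```python
# This loop replaces the one inside the with block of the previous example
for future in concurrent.futures.as_completed(futures):
    try:
        url, status_code = future.result()
    except Exception as e:
        print(f'Error occurred: {e}')
    else:
        print(f'{url}: {status_code}')
```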

Any exception raised inside fetch is stored on the Future and re-raised when we call result. By calling result inside a try block, we can catch and handle it. Isn't it simple?
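One thing the snippet above cannot tell you is which input failed, because the exception alone does not carry the URL. A common way around this is to keep a dictionary that maps each Future back to its input. The sketch below shows the idea without any network access; risky_divide and collect are hypothetical names chosen for illustration:

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def risky_divide(n):
    """Hypothetical task that fails for n == 0."""
    return 10 / n


def collect(numbers):
    successes, failures = {}, {}
    with ThreadPoolExecutor() as executor:
        # Map each Future back to its input so failures can be attributed
        future_to_input = {executor.submit(risky_divide, n): n for n in numbers}
        for future in as_completed(future_to_input):
            n = future_to_input[future]
            try:
                successes[n] = future.result()  # re-raises the worker's exception
            except ZeroDivisionError as exc:
                failures[n] = exc
    return successes, failures


successes, failures = collect([1, 2, 0, 5])
print(successes)
print(failures)
```

The failing input does not stop the other tasks; they still complete and land in successes.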

asyncio Module

Now let's look at the asyncio module, which is the foundation for implementing asynchronous programming in Python. First, let's see a simple example:

```python
import asyncio


async def foo():
    await asyncio.sleep(1)
    print('Foo done')


async def bar():
    await asyncio.sleep(2)
    print('Bar done')


async def main():
    await asyncio.gather(foo(), bar())


asyncio.run(main())
```

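In this code, foo and bar are coroutine functions. The await keyword suspends a coroutine until the awaited operation finishes, letting the event loop run other coroutines in the meantime. asyncio.gather runs multiple coroutines concurrently and waits for all of them to complete, so the whole program takes about 2 seconds rather than 3.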
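You can check the overlap yourself by timing the gather call. This is a minimal sketch with shortened delays; wait_and_return and timed_gather are hypothetical helpers, not part of asyncio:

```python
import asyncio
import time


async def wait_and_return(label, delay):
    await asyncio.sleep(delay)  # yields control back to the event loop
    return label


async def timed_gather():
    start = time.perf_counter()
    # Both coroutines wait at the same time, so the delays overlap
    results = await asyncio.gather(
        wait_and_return("foo", 0.1),
        wait_and_return("bar", 0.2),
    )
    return results, time.perf_counter() - start


results, elapsed = asyncio.run(timed_gather())
print(results, f"{elapsed:.2f}s")
```

The elapsed time should land near the longer delay (0.2 seconds), not the sum of the two.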

You might ask, what does this look like with real I/O instead of sleep? Don't worry, let's fetch a few web pages asynchronously:

```python
import asyncio

import aiohttp


async def fetch(url):
    # A session per request keeps the example short; real code usually shares one session
    async with aiohttp.ClientSession() as session:
        async with session.get(url) as response:
            return await response.text()


async def main():
    urls = [
        'https://www.baidu.com',
        'https://www.google.com',
        'https://www.python.org',
    ]
    # create_task schedules each coroutine on the event loop right away
    tasks = [asyncio.create_task(fetch(url)) for url in urls]
    results = await asyncio.gather(*tasks)
    for result in results:
        print(len(result))


asyncio.run(main())
```

This code uses the aiohttp library to make asynchronous HTTP requests. We wrap each coroutine in a Task with asyncio.create_task and use asyncio.gather to run them concurrently and collect the results in the same order as the URLs. Finally, we print the length of each response body.
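By default, gather raises the first exception it sees, which hides the results of the other tasks. Passing return_exceptions=True puts exceptions into the result list instead. The sketch below illustrates this without touching the network: fake_fetch is a hypothetical stand-in for an HTTP request, and the example.com URLs are placeholders:

```python
import asyncio


async def fake_fetch(url):
    """Hypothetical stand-in for an HTTP request; no network is used."""
    await asyncio.sleep(0.01)
    if "bad" in url:
        raise ValueError(f"cannot fetch {url}")
    return f"body of {url}"


async def fetch_all(urls):
    tasks = [asyncio.create_task(fake_fetch(url)) for url in urls]
    # return_exceptions=True places exceptions in the result list instead of raising
    return await asyncio.gather(*tasks, return_exceptions=True)


urls = ["https://example.com/a", "https://example.com/bad", "https://example.com/c"]
results = asyncio.run(fetch_all(urls))
for url, result in zip(urls, results):
    status = "failed" if isinstance(result, Exception) else "ok"
    print(url, status)
```

Since results come back in input order, zipping them with the URLs tells you exactly which request failed.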

See? With async/await syntax, we can write asynchronous programs in a synchronous code style, thus avoiding the drawbacks of traditional nested callback functions. Isn't it cool?

Multi-threading vs Multi-processing

Since we have both threading and multiprocessing modules, which one should we choose? Let's compare:

- The threading module is suitable for I/O-intensive tasks, such as network requests, file reading and writing, etc. However, due to the existence of GIL (Global Interpreter Lock), multi-threading may not achieve performance improvements when executing CPU-intensive tasks.

- The multiprocessing module is more suitable for CPU-intensive tasks, as each process has its own independent Python interpreter, unaffected by GIL. However, inter-process communication and context switching costs are relatively high, requiring a trade-off.

Therefore, for I/O-intensive tasks, we can prioritize the threading module, while for CPU-intensive tasks, the multiprocessing module is usually the better choice. Of course, the right answer still depends on your actual workload, so measure before you commit.
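One way to see the difference on your own machine is to run the same CPU-bound function through a thread pool and a process pool and compare the timings. This is only a rough sketch: count_primes and timed_run are hypothetical helpers, the workload size is arbitrary, and the numbers you get will depend on your hardware and Python build:

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor
import time


def count_primes(limit):
    """CPU-bound work: count primes below limit by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def timed_run(executor_cls, limits):
    start = time.perf_counter()
    with executor_cls(max_workers=4) as executor:
        results = list(executor.map(count_primes, limits))
    return results, time.perf_counter() - start


if __name__ == "__main__":  # guard needed where worker processes re-import this module
    limits = [50_000] * 4
    for cls in (ThreadPoolExecutor, ProcessPoolExecutor):
        results, elapsed = timed_run(cls, limits)
        print(f"{cls.__name__}: {elapsed:.2f}s")
```

On a standard CPython build with the GIL and several cores, you would typically expect the process pool to finish faster here, but for small workloads the cost of starting processes can outweigh the gain.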

Best Practices for Concurrent Programming

Finally, let's summarize some best practices for concurrent programming:

- Choose the appropriate concurrent model: Select the threading, multiprocessing, or asyncio module based on the nature of the task.

- Avoid shared states: Minimize shared states between threads or processes to effectively avoid race conditions.

- Use thread-safe data structures: If data must be shared, use thread-safe data structures such as queue.Queue.

- Handle exceptions properly: In concurrent code, be sure to handle exceptions properly to prevent program crashes.

- Pay attention to resource management: Correctly close thread pools, process pools, and other resources to prevent resource leaks.
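To illustrate the point about thread-safe data structures, here is a small sketch of worker threads that exchange data only through queue.Queue. The worker and square_all names are made up for this example, and using None as a "no more work" sentinel is just one common convention:

```python
import queue
import threading


def worker(tasks, results):
    """Pull numbers from the task queue until a None sentinel arrives."""
    while True:
        item = tasks.get()
        if item is None:  # sentinel: no more work for this worker
            break
        results.put(item * item)


def square_all(numbers, num_workers=3):
    tasks, results = queue.Queue(), queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(tasks, results))
        for _ in range(num_workers)
    ]
    for t in threads:
        t.start()
    for n in numbers:
        tasks.put(n)
    for _ in threads:
        tasks.put(None)  # one sentinel per worker
    for t in threads:
        t.join()
    # All workers have exited, so draining the results queue here is safe
    return sorted(results.get() for _ in numbers)


print(square_all([1, 2, 3, 4, 5]))  # [1, 4, 9, 16, 25]
```

The threads never touch a shared list or dictionary directly, so no explicit lock is needed; the queues handle the synchronization.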

By following these best practices, you can write efficient and robust concurrent programs.

Well, that's all for the basics of Python concurrent programming. I believe that through today's sharing, you now have a preliminary understanding of concurrent programming. Now it's up to you to practice and explore! If you have any questions, feel free to ask me anytime. The road of programming is long and arduous, let's forge ahead together on this path!
