Introduction

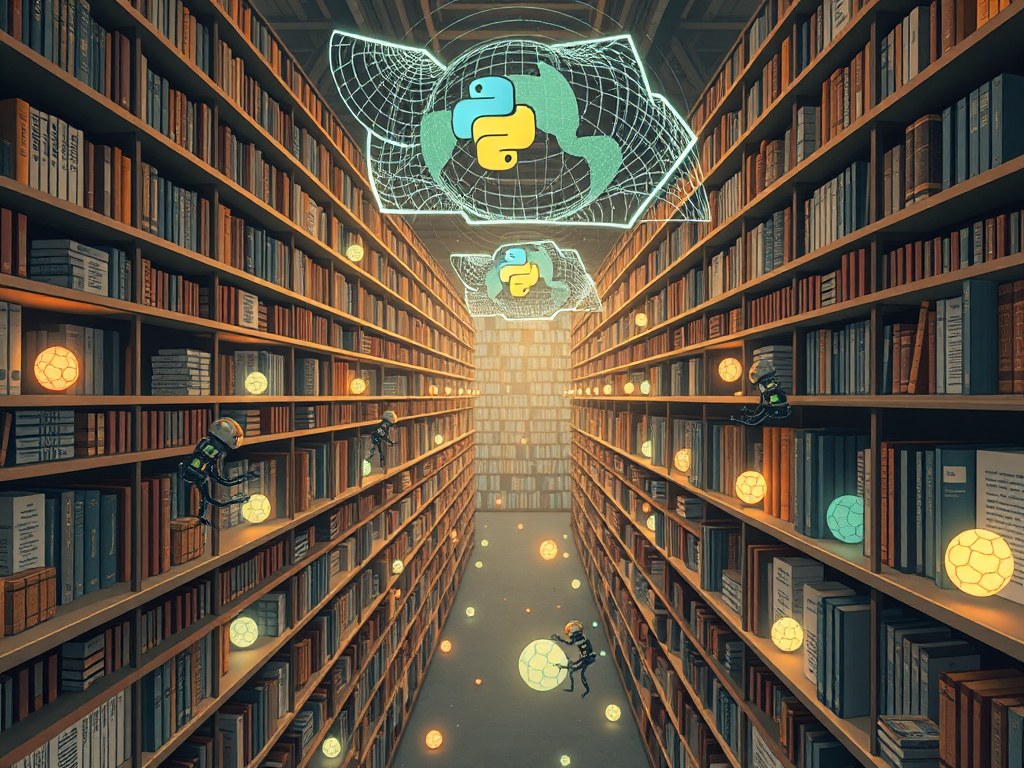

Hello, dear readers! Today we're going to talk about the interesting topic of Python web scraping. In this information age, web data is undoubtedly a valuable resource. Being proficient in web scraping techniques can be very beneficial for our daily work and study. Don't worry, Python provides us with many useful libraries that make web scraping a breeze. Now, let's dive in!

Basics

Let's start with the basics. To scrape web content, we need two tools: urllib2 to fetch pages and BeautifulSoup to parse them.

Getting Started with urllib2

urllib2 can be considered the Swiss Army knife of Python web scraping. It can be used to send requests and get responses. Here's a small example:

```python
import urllib2  # Python 2 only

response = urllib2.urlopen('http://example.com')
html = response.read()
```

Simple, right? We just need to pass the URL to the urlopen() function, and we can get the HTML source code of that webpage. However, remember that urlopen() only gets the source code. To further parse the data, we need the help of BeautifulSoup.
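urllib2 does not exist on Python 3. There, its urlopen() lives in urllib.request. The sketch below is a rough standard-library equivalent. The function name fetch_html and the UTF-8 fallback are assumptions for illustration and are not part of urllib itself. On Python 3, read() returns bytes, so the sketch decodes them using the charset from the Content-Type header.

```python
from urllib.request import Request, urlopen


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page with only the standard library and return it as str.

    fetch_html is a hypothetical helper name used for illustration.
    """
    req = Request(url)
    with urlopen(req, timeout=timeout) as response:
        raw = response.read()  # bytes on Python 3
        # Use the charset declared in Content-Type; assume UTF-8 if none is given
        charset = response.headers.get_content_charset() or "utf-8"
    return raw.decode(charset, errors="replace")
```

Calling fetch_html('http://example.com') would return the page's HTML as a string, which can then be handed to a parser.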

Getting Started with BeautifulSoup

BeautifulSoup is a very user-friendly HTML/XML parsing library. Combined with urllib2, we can easily extract the data we need from web pages. Look at this example:

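```python
from BeautifulSoup import BeautifulSoup  # BeautifulSoup 3, Python 2 only

soup = BeautifulSoup(html)

# Find every table with class "data", then walk through its rows
for table in soup('table', {'class': 'data'}):
    for row in table('tr'):
        tds = row('td')
        if len(tds) >= 2:  # skip header rows and short rows
            print tds[0].string, tds[1].string
```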

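This code prints the contents of the first two cells of every row in each table whose class is 'data'. Calling the soup object directly, as in soup('table', ...), is shorthand for findAll(), which locates elements by tag name and attributes, a bit like a jQuery lookup. BeautifulSoup 3 cannot use CSS selectors. That ability comes with the newer bs4 package, through its select() method.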
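On Python 3 with bs4 (BeautifulSoup 4), the same query can be written with find_all() or, as a CSS selector, with select(). Here is a minimal sketch that runs on a small inline table. The sample HTML and the function names are made up for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical sample page for illustration
SAMPLE_HTML = """
<table class="data">
  <tr><th>Name</th><th>Price</th></tr>
  <tr><td>Apple</td><td>1.20</td></tr>
  <tr><td>Pear</td><td>0.80</td></tr>
</table>
"""


def first_two_cells(html):
    """find_all() version: match elements by tag name and attributes."""
    soup = BeautifulSoup(html, "html.parser")
    pairs = []
    for table in soup.find_all("table", class_="data"):
        for row in table.find_all("tr"):
            tds = row.find_all("td")
            if len(tds) >= 2:  # the header row has <th>, not <td>, so it is skipped
                pairs.append((tds[0].string, tds[1].string))
    return pairs


def first_two_cells_css(html):
    """select() version: the same query written as a CSS selector."""
    soup = BeautifulSoup(html, "html.parser")
    pairs = []
    # "table.data tr" means every <tr> inside a <table class="data">
    for row in soup.select("table.data tr"):
        tds = row.select("td")
        if len(tds) >= 2:
            pairs.append((tds[0].string, tds[1].string))
    return pairs
```

Both functions return the same list of (first cell, second cell) pairs. Use whichever style you find easier to read.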

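However, urllib2 and BeautifulSoup 3 work only on Python 2. In Python 3, urllib2's functionality was split into urllib.request and urllib.error. Even so, the third-party requests library is the more common choice for sending requests, and it is usually paired with bs4 (BeautifulSoup 4) for parsing.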

Hands-on with requests

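The requests library is more concise than urllib2. It decodes the response body for you (r.text), and it accepts headers and query parameters as plain dictionaries. It also raises an exception for HTTP error status codes when you call r.raise_for_status(). Here's a small example: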

```python
import requests
from bs4 import BeautifulSoup

r = requests.get('http://example.com')
soup = BeautifulSoup(r.text, 'html.parser')
```

See? With just a few lines of code, we have downloaded the page and parsed its HTML into a BeautifulSoup object!
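Here is a slightly more defensive variant of the requests example, as a sketch. It sets a timeout so that a stalled server can't hang the script. It calls raise_for_status() so that HTTP errors surface as exceptions. It then collects every link on the page as an absolute URL. The helper names get_soup and extract_links are hypothetical.

```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup


def get_soup(url: str, timeout: float = 10.0) -> BeautifulSoup:
    """Fetch a page and parse it; raises requests.HTTPError on 4xx/5xx."""
    r = requests.get(url, timeout=timeout)
    r.raise_for_status()
    return BeautifulSoup(r.text, "html.parser")


def extract_links(soup: BeautifulSoup, base_url: str) -> list[str]:
    """Return absolute URLs for every <a> tag that has an href attribute."""
    # urljoin resolves relative links such as "/about" against the page URL
    return [urljoin(base_url, a["href"]) for a in soup.find_all("a", href=True)]
```

Usage would look like extract_links(get_soup('http://example.com'), 'http://example.com'). Only get_soup touches the network, which keeps the parsing part easy to test offline.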

Advanced

After mastering the basic operations, let's look at some advanced techniques to help you better scrape data.

Scraping Dynamic Webpages

Some websites load their content dynamically with JavaScript. The requests library only downloads the initial HTML and does not execute scripts, so that content never appears in r.text. In this case, we need to drive a real browser that executes the JavaScript for us.

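```python
from selenium import webdriver

driver = webdriver.Chrome()  # starts a real Chrome browser
try:
    driver.get('http://example.com')
    html = driver.page_source  # the page as currently rendered by the browser
finally:
    driver.quit()  # close the browser and end the driver session
```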

This is what Selenium does. It launches a real browser that executes the page's JavaScript, and driver.page_source then returns the rendered HTML, including the dynamically loaded data.

However, Selenium has a drawback: launching a browser can be quite slow. So for scraping static webpages, it's better to use libraries like requests.

Handling Anti-Scraping Measures

To deter scraping, some websites inspect the request headers, especially User-Agent, and refuse requests that don't appear to come from a browser. By default, requests identifies itself as python-requests/<version>, which is easy to block. In this case, we can set the request headers so that we look like a browser.

```python
import requests

headers = {'User-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:80.0) Gecko/20100101 Firefox/80.0'}
r = requests.get('http://example.com', headers=headers)
```

Sites that check only the User-Agent will then usually treat the request as coming from a browser and accept it. Sites with stricter checks may still block it.
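If you make several requests, a requests.Session lets you set the headers once, and it reuses the underlying connection between requests. Here is a minimal sketch. The User-Agent is the one from the example above. The Accept-Language value and the helper name make_session are assumptions added for illustration.

```python
import requests

# User-Agent taken from the example above; Accept-Language is an illustrative assumption
BROWSER_HEADERS = {
    "User-Agent": "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:80.0) Gecko/20100101 Firefox/80.0",
    "Accept-Language": "en-US,en;q=0.9",
}


def make_session(headers=None) -> requests.Session:
    """Create a Session whose requests all carry the given headers (hypothetical helper)."""
    session = requests.Session()
    # Merges into requests' defaults, overriding its python-requests User-Agent
    session.headers.update(headers or BROWSER_HEADERS)
    return session
```

After session = make_session(), every session.get(url) sends these headers without repeating headers=... on each call.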

Also, many websites use a robots.txt file to state which paths crawlers may and may not access. It's best to check this file before scraping and skip any disallowed paths.
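Python's standard library can run this check for you with urllib.robotparser. So that it works offline, the sketch below parses a robots.txt body supplied as a string. The sample rules and the helper names are made up. Against a live site, you would instead call parser.set_url('https://example.com/robots.txt') followed by parser.read().

```python
from urllib.robotparser import RobotFileParser


def build_robots_parser(robots_txt: str) -> RobotFileParser:
    """Parse a robots.txt body that has already been downloaded (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser: RobotFileParser, url: str, user_agent: str = "*") -> bool:
    """Return True if the rules permit user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


# Hypothetical rules: everyone may crawl the site except /private/
SAMPLE_ROBOTS = "User-agent: *\nDisallow: /private/\n"
```

A scraper could call allowed() before each request and simply skip any URL for which it returns False.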

Data Parsing

After scraping the webpage data, we need to parse it. Here I'll share some more tips.

Parsing Local Files

During development, we might want to parse a local HTML file first to check if our code is correct. BeautifulSoup can easily parse local files as well:

```python
from bs4 import BeautifulSoup

with open('local.html', encoding='utf-8') as file:
    soup = BeautifulSoup(file, 'html.parser')

# Parse soup object, e.g. soup.select('table')
```

This way we can debug our code locally, and apply it to actual scraping once the code is perfected.
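One workflow that fits this approach, sketched under some assumptions: save the page once, then parse the saved copy as often as you like while you refine your selectors. The helper names save_page and parse_local_html are hypothetical, and the sketch assumes the files are UTF-8.

```python
from pathlib import Path

from bs4 import BeautifulSoup


def save_page(html: str, path) -> None:
    """Write downloaded HTML to disk so it can be re-parsed offline (hypothetical helper)."""
    Path(path).write_text(html, encoding="utf-8")


def parse_local_html(path) -> BeautifulSoup:
    """Parse a saved HTML file with the same parser used for live pages."""
    with open(path, encoding="utf-8") as file:
        return BeautifulSoup(file, "html.parser")
```

For example, you could pass r.text from the requests example to save_page() and then develop against parse_local_html() without hitting the site again.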

Extracting Table Data

In webpages, tables are one of the most common forms of data presentation. To extract data from tables, we can do this:

```python
table = soup.select('table')[0]  # Get the first table
rows = table.select('tr')  # Get all rows

for row in rows:
    cols = row.select('td')  # Get all cells in the row
    values = [col.text for col in cols]  # Get the text of each cell
    print(values)
```

Using the select() method, we can accurately locate tables, rows, and cells with CSS selectors. This method can effectively extract structured data from tables.
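When the first row of a table holds column names in <th> cells, it is often handier to turn each remaining row into a dictionary keyed by those names. Here is a sketch of that idea. It assumes a simple table without rowspan or colspan, and the function name and sample HTML are illustrative.

```python
from bs4 import BeautifulSoup


def table_to_dicts(html: str, selector: str = "table") -> list[dict[str, str]]:
    """Convert the first table matching selector into a list of row dicts.

    Hypothetical helper: assumes the first <tr> contains <th> column names and
    that no cells span multiple rows or columns.
    """
    soup = BeautifulSoup(html, "html.parser")
    table = soup.select_one(selector)
    if table is None:
        return []
    rows = table.select("tr")
    if not rows:
        return []
    headers = [th.get_text(strip=True) for th in rows[0].select("th")]
    records = []
    for row in rows[1:]:
        cells = [td.get_text(strip=True) for td in row.select("td")]
        if len(cells) == len(headers):  # skip malformed or short rows
            records.append(dict(zip(headers, cells)))
    return records
```

A list of dictionaries like this can be written straight to CSV with csv.DictWriter or loaded into a data analysis tool.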

Conclusion

That's all we'll cover today. There's still a lot to learn about Python web scraping, but if you master the above basic and advanced techniques, you should be able to try scraping interesting web data from many sites.

Finally, please be sure to follow the rules during scraping and use public data reasonably and legally. I wish you happy learning and smooth sailing on your programming journey! Feel free to give me feedback if you have any questions. Remember to practice hands-on, as practice is the fastest way to master skills. Keep it up!
