Hey, dear Python enthusiasts! Today, we're embarking on an exciting journey to explore the mysteries of Python web scraping. Are you eager to become a data collection wizard? Let's unlock this powerful skill together!

Introduction to Web Scraping

Do you remember how you felt when you first heard about "web scraping"? I was as excited as if I had discovered a new continent! Simply put, web scraping is the process of automatically extracting data from web pages. Imagine collecting information from thousands of web pages in a single run. Don't you feel like a superhero in the data world?

But wait, why choose Python for this task? I can proudly tell you that Python is a great fit for web scraping: its simple syntax and rich library ecosystem make it our trusty assistant. I remember that when I started learning, just a few lines of code were enough to scrape a webpage, and the sense of accomplishment was indescribable!

Toolbox

Speaking of tools, our Python web scraping toolbox is full of treasures. Let me introduce a few heavyweight contenders:

- Requests: My favorite! Sending HTTP requests is as natural as breathing.

- BeautifulSoup: Parsing HTML and XML? It's your gourmet chef!

- Scrapy: Handling complex scraping tasks? Scrapy is your project manager.

- Selenium: Dealing with dynamic content? It's your magician!

Every time I use these tools, I feel like I'm operating a supercomputer. How about you, which one is your favorite?

Time for Action

Alright, we've covered enough theory; it's time to showcase our skills! Let's see how to use these powerful tools.

Requests: Your First Request

Do you remember sending your first HTTP request? With the Requests library, it’s a piece of cake:

    import requests

    response = requests.get('https://www.example.com')

    print(response.status_code)  # 200 means the request succeeded
    print(response.content)      # The raw response body (bytes) we scraped

Seeing the webpage content displayed obediently on your screen—don't you feel like you've conquered the internet?
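Real websites are not always this obedient, so here is a minimal sketch of a slightly more defensive request. The helper name `fetch_html`, the `User-Agent` string, and the 10-second timeout are my own assumptions for illustration, not anything Requests prescribes:

```python
import requests

# Hypothetical identifier for our scraper; pick something that describes yours.
USER_AGENT = "example-scraper/0.1"


def fetch_html(url, session=None, timeout=10.0):
    """Return the page body as text, or None if the request fails."""
    if session is None:
        session = requests.Session()
    try:
        response = session.get(
            url,
            timeout=timeout,                      # avoid hanging forever on a slow server
            headers={"User-Agent": USER_AGENT},
        )
        response.raise_for_status()               # turn 4xx/5xx responses into exceptions
    except requests.RequestException as exc:      # base class for Requests errors
        print(f"Request for {url} failed: {exc}")
        return None
    return response.text                          # body decoded to a string
```

Calling `fetch_html('https://www.example.com')` should give you the HTML as a string, or `None` when the server is unreachable or answers with an error status. Passing in your own `session` lets you reuse connections across many requests.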

BeautifulSoup: The HTML Parsing Master

Next, let's parse this webpage with BeautifulSoup:

    from bs4 import BeautifulSoup
    import requests

    response = requests.get('https://www.example.com')
    soup = BeautifulSoup(response.content, 'html.parser')

    # Collect every <h1> element and print its text
    titles = soup.find_all('h1')
    for title in titles:
        print(title.text)

Every time I see this code in action, I can't help but admire how smart BeautifulSoup is! It's like a gentle chef, effortlessly turning messy HTML into something neatly organized.
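If you want to try BeautifulSoup without hitting a real site, here is a small sketch that parses an inline HTML string. The markup, the `post` class, and the URLs in it are made up for illustration:

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page.
html = """
<html><body>
  <h1>  Latest Posts </h1>
  <ul>
    <li><a class="post" href="/posts/1">First post</a></li>
    <li><a class="post" href="/posts/2">Second post</a></li>
    <li><a href="/about">About</a></li>
  </ul>
</body></html>
"""

soup = BeautifulSoup(html, "html.parser")

# get_text(strip=True) trims the whitespace around the heading text.
heading = soup.find("h1").get_text(strip=True)

# select() takes a CSS selector; here it matches only <a> tags with class "post".
posts = [(a.get_text(strip=True), a["href"]) for a in soup.select("a.post")]

print(heading)
print(posts)
```

I find CSS selectors with `select()` handy when the elements I want share a class, while `find()` and `find_all()` are enough for simple tag lookups.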

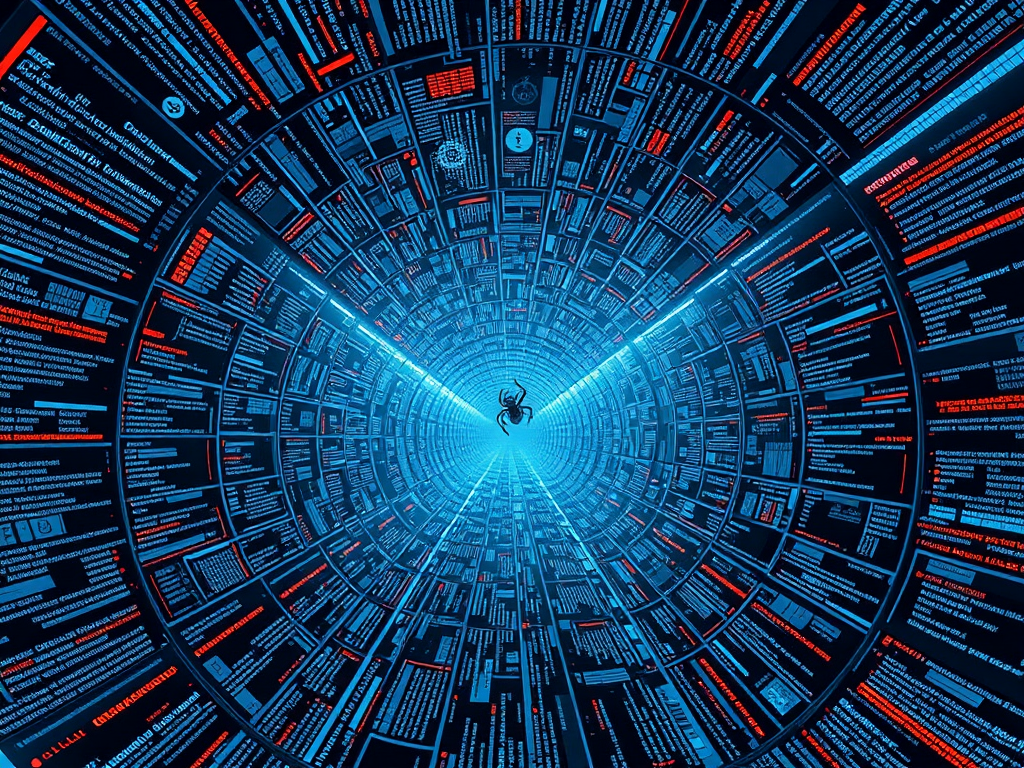

Scrapy: The King of Web Crawling Frameworks

If you want to tackle more complex scraping tasks, Scrapy is a strong choice. Check out this simple Scrapy spider:

    import scrapy

    class ExampleSpider(scrapy.Spider):
        name = 'example'
        start_urls = ['https://www.example.com']

        def parse(self, response):
            # Yield one item per <h1> text found on the page
            for title in response.css('h1::text').getall():
                yield {'title': title}

The first time I used Scrapy, I was stunned! Not only is it powerful, but its clear structure makes complex scraping tasks so elegant.

Selenium: The Dynamic Content Harvester

For tricky, dynamically loaded content, Selenium is your savior:

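    from selenium import webdriver
    from selenium.webdriver.common.by import By

    driver = webdriver.Chrome()
    driver.get('https://www.example.com')

    # Selenium 4 removed find_elements_by_tag_name; use find_elements with By
    titles = driver.find_elements(By.TAG_NAME, 'h1')
    for title in titles:
        print(title.text)

    driver.quit()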

I remember the first time I successfully scraped dynamic content; the excitement was better than winning the lottery!

The Art of Data

Scraping data is just the first step; we also need to process it well. Data cleaning and storage are art forms too!

Data Cleaning: Turning the Mundane into Magic

Data cleaning may seem tedious, but it's the key step in turning chaotic raw data into valuable information. Some techniques I often use include:

- Removing unnecessary whitespace

- Unifying date formats

- Handling missing values

- Removing duplicate data
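The four techniques above can be sketched with nothing but the standard library. The sample rows, the two date formats, and the helper names `normalize_date` and `clean_rows` are assumptions I made up for this illustration; real data will need its own rules:

```python
from datetime import datetime

# Hypothetical scraped rows: (title, date string, price string).
raw_rows = [
    ("  Widget A ", "2024-01-05", "19.99"),
    ("Widget B", "05/01/2024", ""),
    ("Widget A", "2024-01-05", "19.99"),  # duplicate once whitespace is trimmed
]

# Date formats we assume the source uses (day/month/year for the second one).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def normalize_date(value):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_rows(rows):
    cleaned, seen = [], set()
    for title, date, price in rows:
        record = (
            " ".join(title.split()),                   # trim and collapse whitespace
            normalize_date(date),                      # unify date formats
            float(price) if price.strip() else None,   # missing value becomes None
        )
        if record not in seen:                         # drop exact duplicates
            seen.add(record)
            cleaned.append(record)
    return cleaned


print(clean_rows(raw_rows))
```

For larger datasets a library such as pandas may be more convenient, but plain Python like this is often enough for a first pass.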

I once spent an entire day cleaning a massive dataset. When all the data finally lined up neatly in front of me, the sense of accomplishment was indescribable!

CSV: The Safe Haven for Data

Storing cleaned data is equally important. CSV format, with its simplicity and universality, is my top choice:

    import csv

    data = [['Title1', 'Description1'], ['Title2', 'Description2']]

    # newline='' stops the csv module from writing extra blank lines on Windows
    with open('output.csv', mode='w', newline='') as file:
        writer = csv.writer(file)
        writer.writerows(data)

Every time I see data lying obediently in a CSV file, I feel a sense of "mission accomplished." How about you, do you have similar experiences?
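If you would like a header row and want to check that what you wrote can be read back, here is one possible sketch using `csv.DictWriter` and `csv.DictReader`. The field names, the sample rows, and the file name `scraped_output.csv` are hypothetical:

```python
import csv
import os
import tempfile

# Hypothetical cleaned records; the second description contains a comma on purpose.
rows = [
    {"title": "Title1", "description": "Description1"},
    {"title": "Title2", "description": "Contains, a comma"},
]

# Write to the system temp directory so the example leaves your project untouched.
path = os.path.join(tempfile.gettempdir(), "scraped_output.csv")

with open(path, mode="w", newline="", encoding="utf-8") as file:
    writer = csv.DictWriter(file, fieldnames=["title", "description"])
    writer.writeheader()      # first line: title,description
    writer.writerows(rows)    # values with commas are quoted automatically

with open(path, newline="", encoding="utf-8") as file:
    loaded = list(csv.DictReader(file))  # each row comes back keyed by header

print(loaded)
```

Setting `encoding="utf-8"` explicitly is a choice I tend to make for scraped text, since pages often contain non-ASCII characters and the platform default encoding varies.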

The Moral Compass

While we indulge in the joy of web scraping, don't forget to follow some basic rules. Remember to check a site’s robots.txt file and comply with its terms of use and relevant laws and regulations. I always compare this process to being a guest at someone else's house—maintaining politeness and respect ensures we can continue to enjoy this "data feast."

Once, I almost got into trouble for ignoring a website's robots.txt file. Since then, I always remind myself: technical abilities and ethical responsibilities are equally important.
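Python's standard library can do the robots.txt check for you. Here is a minimal sketch with `urllib.robotparser`; the robots.txt content and the `example-scraper` user agent are made up, and I feed the rules in as text so the example runs without touching the network:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one lives at the root of the site.
robots_txt = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())  # load the rules from text

USER_AGENT = "example-scraper"  # assumed name for our scraper

allowed_article = parser.can_fetch(USER_AGENT, "https://www.example.com/articles/1")
allowed_private = parser.can_fetch(USER_AGENT, "https://www.example.com/private/data")
delay = parser.crawl_delay(USER_AGENT)  # seconds to wait between requests, if given

print(allowed_article, allowed_private, delay)
```

Against a live site you would call `parser.set_url(...)` with the site's robots.txt address and then `parser.read()` instead of `parse()`. Keep in mind that robots.txt is only part of the picture; the site's terms of use and the law still apply.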

Conclusion

Dear Python enthusiasts, our web scraping journey is coming to a temporary close. Looking back, do you feel enriched? From sending the first HTTP request to successfully scraping complex dynamic content, and then cleaning and storing data, every step is full of challenges and fun.

Remember, web scraping is not just a technique but an art. It requires continuous learning, practice, and constant curiosity about new technologies. Do you have any interesting web scraping experiences to share, or questions about a specific scraping task? Feel free to leave a comment so we can discuss and progress together!

Finally, I want to say that the world of Python web scraping is vast, and today we’ve only explored the tip of the iceberg. Stay passionate, keep learning, and you'll make great strides in this field. Let’s look forward to the next data adventure together!
