Hey, Python enthusiasts! Today we're going to talk about a topic that's both interesting and practical - web scraping. Have you ever thought about how great it would be to automatically retrieve large amounts of information from web pages? Or are you curious about how the apps that push massive amounts of news to you every day actually work? Today, let's unveil the mystery of web scraping and embark on a wonderful Python journey together!

First Exploration

Remember the excitement when you first encountered web scraping? It was like discovering a new continent! But before we begin, I want to ask you: do you know what web scraping really is? Simply put, it's automatically extracting the information we need from web pages through programs. Sounds cool, right?

So, how do we start? No rush. Let's first get to know two powerful tools: BeautifulSoup and Requests.

BeautifulSoup is like an interpreter fluent in HTML: it helps us understand the structure of a web page and find the information we need. Requests, on the other hand, is like a diligent messenger that fetches the content of web pages for us.

Take a look at this simple code:

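    import requests
    from bs4 import BeautifulSoup

    # Download the page; the timeout keeps the script from hanging on a slow server.
    response = requests.get('https://example.com', timeout=10)
    response.raise_for_status()  # Stop early on HTTP errors such as 404 or 500.

    # Parse the HTML and print the text of every <h2> heading.
    soup = BeautifulSoup(response.text, 'html.parser')
    for item in soup.find_all('h2'):
        print(item.text)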

See, it's that simple! We used Requests to get the web page content, then used BeautifulSoup to parse it, and finally printed all the h2 titles. Don't you feel like you've already become a web scraping expert?
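
To see the parsing step on its own, here is a minimal sketch that runs without any network access. The HTML string, the `extract_headlines` helper, and the link structure are all made up for illustration; a real page will look different, so treat this as a starting point rather than a recipe.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML standing in for a downloaded page (what response.text would hold).
SAMPLE_HTML = """
<html><body>
  <h2 class="title"><a href="/post/1">First post</a></h2>
  <h2 class="title"><a href="/post/2">  Second post </a></h2>
  <h2>Section without a link</h2>
</body></html>
"""


def extract_headlines(html):
    """Return (text, href) pairs for every <h2>; href is None when there is no link."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for heading in soup.find_all("h2"):
        link = heading.find("a")  # First <a> inside the heading, or None.
        href = link.get("href") if link is not None else None
        # get_text(strip=True) trims the whitespace around the heading text.
        results.append((heading.get_text(strip=True), href))
    return results


headlines = extract_headlines(SAMPLE_HTML)
for text, href in headlines:
    print(text, href)
```

Keeping the parsing in its own function means you can try it on a saved copy of a page before pointing it at a live site.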

Diving Deeper

Alright, now that we've mastered the basics, let's dive a bit deeper and explore more interesting techniques!

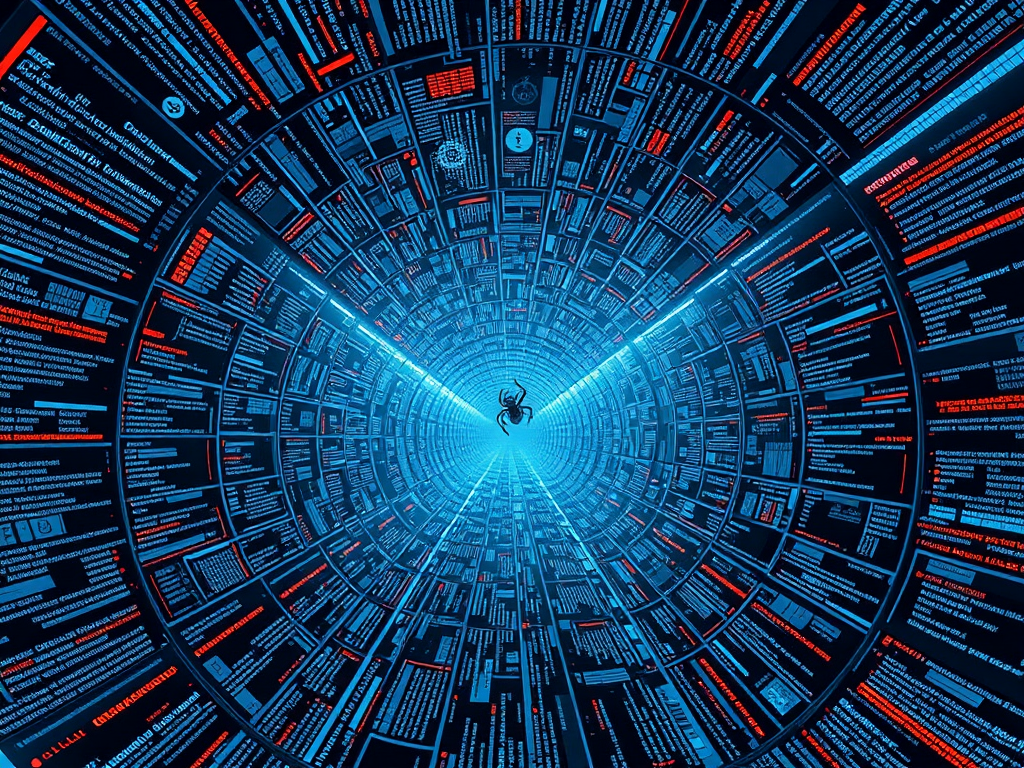

Have you ever encountered a situation where there's clearly content on the web page, but you can't scrape it using the method we just learned? Don't worry, it's likely because that content is dynamically loaded through JavaScript. In this case, we need a more powerful tool - Selenium.

Selenium is like a robot that can browse the web: it simulates the way we use a browser. Let's see how to use it to scrape dynamic content:

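    from selenium import webdriver
    from selenium.webdriver.common.by import By

    driver = webdriver.Chrome()  # Launches a real Chrome browser.
    try:
        driver.get('https://example.com')
        # Selenium 4 removed find_element_by_id; use find_element with a By locator.
        content = driver.find_element(By.ID, 'content').text
        print(content)
    finally:
        driver.quit()  # Always close the browser, even if the lookup fails.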

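Isn't it amazing? Selenium opens a real browser and runs the page's JavaScript, so we can read the rendered content directly. If the content only appears after a delay, you may also need to wait for it before reading it; Selenium provides WebDriverWait for that.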

But what if we need to scrape data from multiple different websites? Do we have to write a separate program for each website? Don't worry, Python always has a way to make things simple. This is where we can use the Scrapy framework.

Scrapy is like a powerful crawler factory: you can write a separate spider for each website and then let them work at the same time. Imagine scraping the latest headlines from several news sites at once. Isn't that cool?

Challenges

Alright, now we've mastered quite a few techniques, but real-world web scraping brings more challenges. For example, you might run into dynamic tables with pagination, or various anti-scraping mechanisms.

For paginated dynamic tables, we can combine Selenium with a loop:

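    from selenium.common.exceptions import NoSuchElementException
    from selenium.webdriver.common.by import By

    # Assumes `driver` is an open WebDriver already on the first page of the table.
    while True:
        # Print every row of the table on the current page.
        for row in driver.find_elements(By.CSS_SELECTOR, 'table tr'):
            print(row.text)
        try:
            # Go to the next page; the id 'next' is specific to this example page.
            driver.find_element(By.ID, 'next').click()
        except NoSuchElementException:
            break  # No "next" button means this was the last page.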

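This code keeps turning pages until the next button can no longer be found. Isn't that clever? One caveat: if a site keeps the button on the last page and merely disables it, the loop will not stop on its own, so you would need a different stopping check, such as testing whether the button is still enabled.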

As for anti-scraping mechanisms, we also have countermeasures. For instance, we can set request headers to disguise ourselves as a normal browser:

    headers = {'User-Agent': 'Mozilla/5.0'}
    response = requests.get('https://example.com', headers=headers)

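This way, simple checks that only look at the User-Agent header are more likely to treat our request like one from a normal browser. More sophisticated anti-scraping systems look at other signals too, so this alone won't always be enough.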
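
If you send many requests, repeating the headers on every call gets tedious. One possible approach, sketched below, is to set them once on a `requests.Session`. The `make_session` helper, the User-Agent string, and the Accept-Language value are illustrative assumptions, not values any particular site requires.

```python
import requests


def make_session(user_agent):
    """Return a Session that sends the same headers with every request."""
    session = requests.Session()
    session.headers.update({
        "User-Agent": user_agent,
        "Accept-Language": "en-US,en;q=0.9",  # Illustrative value only.
    })
    return session


# Hypothetical User-Agent string; pick one that honestly describes your client.
session = make_session("Mozilla/5.0 (compatible; example-scraper/0.1)")
print(session.headers["User-Agent"])

# Every request made through the session now carries these headers, e.g.:
# response = session.get("https://example.com", timeout=10)
```

A session also reuses the underlying connection between requests to the same host, which is a little kinder to the server.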

Reflection

After saying so much, are you eager to start your own web scraping journey? But before you begin, I'd like to share some personal thoughts with you.

First, although web scraping is powerful, we need to remember to respect the rules of websites. Many websites have robots.txt files that specify what content can be scraped and what can't. As responsible programmers, we should follow these rules.
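
Python's standard library can read these rules for you. The sketch below uses `urllib.robotparser` on a made-up robots.txt so that it runs offline; the file content, the `build_parser` helper, and the agent name `my-scraper` are assumptions for illustration. For a live site you would point the parser at the site's own robots.txt with `set_url()` and `read()` instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one is served at https://<site>/robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser without touching the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


AGENT = "my-scraper"  # Hypothetical name for our scraper.
parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask before fetching: is this URL allowed for our user agent?
print(parser.can_fetch(AGENT, "https://example.com/articles/1"))
print(parser.can_fetch(AGENT, "https://example.com/private/data"))

# Crawl-delay, if the site declares one, suggests how long to pause between requests.
print(parser.crawl_delay(AGENT))
```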

Second, web scraping may put additional burden on websites. If your program frequently visits a certain website, it might affect other users' normal access. So, we need to learn to control the frequency of scraping, giving websites some time to "breathe".
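
One simple way to give a site that breathing room is to pause between requests. Here is a minimal sketch; `fetch_politely`, `fake_fetch`, and the delay values are hypothetical names and numbers chosen for illustration, and the right delay depends on the site.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # Pause before every request except the first.
        results.append(fetch(url))
    return results


# Stand-in fetcher so the sketch runs offline. For real use you could pass
# something like: lambda url: requests.get(url, timeout=10).text
def fake_fetch(url):
    return f"content of {url}"


pages = fetch_politely(
    ["https://example.com/a", "https://example.com/b"],
    fake_fetch,
    delay_seconds=0.1,
)
print(pages)
```

Passing the fetch function in as a parameter keeps the throttling logic separate from how each page is downloaded, so the same loop works with Requests or any other client.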

Lastly, I want to say that web scraping is just a tool. What matters is how we use it. You can use it to collect data for research, to monitor changes in certain information, or even to create a new service. The key is to find a truly valuable application scenario.

Looking Ahead

Alright, our web scraping journey comes to an end for now. But this is just the beginning. As technology develops, web scraping methods are constantly evolving. For example, there are now some AI-based web scraping tools that can automatically identify web page structures and extract useful information.

Personally, I think future web scraping might become more intelligent. Maybe one day, we'll only need to tell the program "I want all the news headlines from this website", and it will automatically complete all the work. Sounds cool, right?

But no matter how technology develops, basic knowledge will always be the most important. The content we covered today may seem simple, but it's the foundation for building more complex systems.

So, I hope you take the time to digest what we learned today, and then start your own exploration. Maybe you'll discover some interesting techniques that I didn't mention, or you'll think of some innovative ways to apply them. Either way, I look forward to hearing your story!

Do you have any thoughts on web scraping? What do you plan to use it for? Feel free to share your ideas in the comments section. Maybe your idea will inspire others!

Alright, that's all for today's sharing. Remember, in the world of programming, learning never ends. Stay curious, be brave enough to try, and you'll surely make more exciting discoveries. See you next time, when we'll continue to explore the mysteries of Python!
