Hey, dear Python enthusiasts! Today we're going to talk about an interesting and practical topic: Python web scraping. Have you ever wondered how to capture the information you want from the vast ocean of the internet with just a few lines of code? Python web scraping is the key that opens the door to that treasure trove of data. Let's explore this fascinating world together!

First Glimpse into the Mystery

First, you might ask: What is web scraping? Simply put, it allows our Python program to act like a diligent little bee, flying around the garden of web pages and collecting the "nectar" (data) we need. Sounds cool, right?

Imagine you're a market analyst who needs to collect a large number of product reviews. Manually copying and pasting? That's too time-consuming and labor-intensive! With web scraping, you can have Python automatically complete this task for you, saving a lot of time. Or you're a researcher who needs to gather the latest tech updates from multiple news sites. Similarly, Python web scraping can be your trusty assistant.

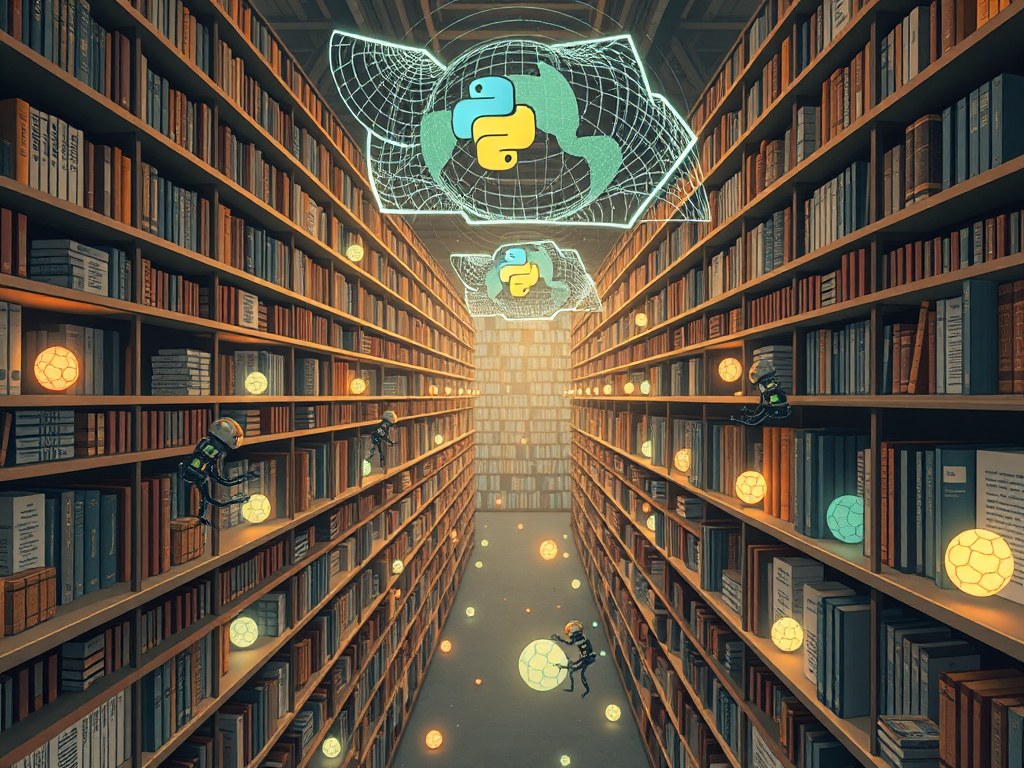

Toolbox Unveiled

When it comes to web scraping, we must mention a few powerful Python libraries. They're like our magical toolbox, each tool with its unique use.

Requests: The Door to the Web World

The Requests library is like the key to opening web pages. It helps us send HTTP requests and retrieve the content of web pages. It's simple and straightforward to use; a few lines of code can get it done:

import requests

url = 'https://www.example.com'
response = requests.get(url)
print(response.text)

See, it's that simple! We tell Python: "Hey, go to this URL and bring back the content." Then, swoosh, the content is in hand.
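In practice, a request can hang or come back with an error status, so it may help to wrap the call in a small helper. The following is only a minimal sketch: the function name `fetch_html`, the 10-second timeout, and the User-Agent string are assumptions chosen for illustration, not something this article's example site requires.

```python
import requests

# Hypothetical identifier for our scraper; replace it with something meaningful.
DEFAULT_HEADERS = {"User-Agent": "my-learning-scraper/0.1"}


def fetch_html(url, timeout=10):
    """Fetch a page and return its HTML as text.

    Raises requests.HTTPError for 4xx/5xx responses and
    requests.Timeout if the server is too slow to respond.
    """
    response = requests.get(url, headers=DEFAULT_HEADERS, timeout=timeout)
    response.raise_for_status()  # turn HTTP error codes into exceptions
    return response.text
```

Calling `fetch_html('https://www.example.com')` would then either return the page source or raise an exception that the caller can catch and handle.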

BeautifulSoup: The Magic Wand for Data Extraction

Once we get the webpage content, the next step is to extract the information we need from it. This is where BeautifulSoup comes in. It's like an intelligent parser that helps us easily extract data from HTML or XML.

from bs4 import BeautifulSoup

soup = BeautifulSoup(response.content, 'html.parser')
title = soup.find('h1').text
print(f"Page title is: {title}")

The strength of BeautifulSoup lies in letting us point at the elements we want and extract them, as if using a magic wand. There's no need for complex regular expressions, and it copes well with messy or imperfect HTML. Keep in mind, though, that if the site's structure changes, your selectors will need updating too.
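To make this more concrete, here is a small sketch that parses an inline HTML string, so it runs without any network access. The sample markup, the `book` class name, and the `extract_links` function are all made up for illustration. It also shows one way to guard against a missing element, since `find` returns `None` when nothing matches.

```python
from bs4 import BeautifulSoup

# Made-up sample page used only for this illustration.
SAMPLE_HTML = """
<html><body>
  <h1>Latest Reviews</h1>
  <ul>
    <li class="book"><a href="/books/1">Dune</a></li>
    <li class="book"><a href="/books/2">Emma</a></li>
  </ul>
</body></html>
"""


def extract_links(html):
    """Return the page heading and a list of (link text, href) pairs."""
    soup = BeautifulSoup(html, "html.parser")

    # find() returns None when there is no match, so check before using it.
    heading = soup.find("h1")
    title = heading.get_text(strip=True) if heading else None

    # select() takes a CSS selector; a[href] skips anchors without an href.
    links = [
        (a.get_text(strip=True), a["href"])
        for a in soup.select("li.book a[href]")
    ]
    return title, links


title, links = extract_links(SAMPLE_HTML)
print(title)
print(links)
```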

Scrapy: The Swiss Army Knife of Web Crawling

If Requests and BeautifulSoup are entry-level tools, then Scrapy is the professional all-rounder. It can not only scrape data but also handle complex crawling logic, supporting large-scale data collection.

With Scrapy, you can define a simple spider in just a few lines:

import scrapy

class MySpider(scrapy.Spider):
    name = 'example'
    start_urls = ['https://www.example.com']

    def parse(self, response):
        yield {'title': response.css('h1::text').get()}

This simple crawler scrapes the first h1 heading of a webpage. The power of Scrapy lies in its complete framework, which covers data extraction, data processing through item pipelines, and, with additional extensions, distributed crawling.

Selenium: The Conqueror of Dynamic Pages

In the world of web scraping, Selenium is the tool to reach for when Requests alone isn't enough. It drives a real browser, so it can handle not only static pages but also dynamic pages full of JavaScript. If you need to scrape a site that requires login or a page with dynamically loaded content, Selenium is often a good choice.

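from selenium import webdriver
from selenium.webdriver.common.by import By

driver = webdriver.Chrome()
driver.get('https://www.example.com')
driver.implicitly_wait(10)  # wait up to 10 seconds when locating elements

# Recent Selenium 4 releases removed the find_element_by_* helpers;
# use find_element with a By locator instead.
button = driver.find_element(By.ID, 'load-more')
button.click()

content = driver.find_element(By.CLASS_NAME, 'dynamic-content').text
print(content)

driver.quit()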

With Selenium, you can simulate real user actions like clicking buttons, filling forms, etc. This makes it the ideal tool for handling complex web pages.

Practical Exercise

Alright, we've covered the theoretical knowledge. Now, let's look at a real example to see how to use these tools to complete an actual web scraping task.

Suppose we want to scrape the latest book reviews from a fictional book review site, "BookLovers.com." The site's structure is as follows:

- The homepage lists the latest 10 books.

- Each book has a detail page containing the title, author, rating, and review.

Our goal is to scrape the information of these 10 books and save it to a CSV file. Let's achieve this step by step:

import requests
from bs4 import BeautifulSoup
import csv

def get_book_info(url):
    response = requests.get(url)
    soup = BeautifulSoup(response.content, 'html.parser')

    title = soup.find('h1', class_='book-title').text.strip()
    author = soup.find('span', class_='author').text.strip()
    rating = soup.find('div', class_='rating').text.strip()
    review = soup.find('div', class_='review').text.strip()

    return {
        'title': title,
        'author': author,
        'rating': rating,
        'review': review
    }

def main():
    base_url = 'https://www.booklovers.com'
    homepage = requests.get(base_url)
    soup = BeautifulSoup(homepage.content, 'html.parser')

    book_links = soup.find_all('a', class_='book-link')

    books = []
    for link in book_links[:10]:  # Only take the first 10 books
        book_url = base_url + link['href']
        book_info = get_book_info(book_url)
        books.append(book_info)

    # Save to CSV file
    with open('latest_books.csv', 'w', newline='', encoding='utf-8') as file:
        writer = csv.DictWriter(file, fieldnames=['title', 'author', 'rating', 'review'])
        writer.writeheader()
        for book in books:
            writer.writerow(book)

    print("Data successfully saved to latest_books.csv!")

if __name__ == '__main__':
    main()

This code does the following:

1. First, we define a get_book_info function to extract information from each book's detail page.

2. In the main function, we first fetch the homepage and find all the book links.

3. Then, we iterate over these links (limited to the first 10), calling the get_book_info function for each book.

4. Finally, we save the collected information to a CSV file.

See, it's that simple! Through this example, you can see how to use Requests and BeautifulSoup together to achieve a practical web scraping task.
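Real pages are rarely this tidy: an element may be missing, a link may already be absolute, and a rating usually arrives as text. The following is one possible way to make the parsing step more forgiving, kept separate from the network calls so it can be tried on plain HTML strings. The helper names are invented for this sketch, and the rating format ("4.5 / 5") is an assumption, since the fictional site does not specify one.

```python
import re
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def safe_text(soup, tag, class_name):
    """Return the stripped text of the first match, or '' if it is missing."""
    element = soup.find(tag, class_=class_name)
    return element.get_text(strip=True) if element else ""


def parse_rating(text):
    """Extract the first number from a rating string such as '4.5 / 5'.

    The format is an assumption; returns None when no number is found.
    """
    match = re.search(r"\d+(?:\.\d+)?", text)
    return float(match.group()) if match else None


def parse_book_page(html):
    """Parse a book detail page without failing on missing fields."""
    soup = BeautifulSoup(html, "html.parser")
    return {
        "title": safe_text(soup, "h1", "book-title"),
        "author": safe_text(soup, "span", "author"),
        "rating": parse_rating(safe_text(soup, "div", "rating")),
        "review": safe_text(soup, "div", "review"),
    }


def absolute_book_links(html, base_url, limit=10):
    """Collect up to `limit` book links, resolved against base_url."""
    soup = BeautifulSoup(html, "html.parser")
    links = soup.find_all("a", class_="book-link", href=True)
    # urljoin handles both relative ('/books/1') and absolute hrefs.
    return [urljoin(base_url, a["href"]) for a in links[:limit]]
```

With helpers like these, `get_book_info` could pass `response.content` to `parse_book_page`, and a missing review would produce an empty string rather than an `AttributeError`.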

Important Considerations

While enjoying the convenience of web scraping, we must also pay attention to some important considerations:

- Respect the site's rules: Many sites have a robots.txt file indicating what content can and cannot be scraped. We should adhere to these rules.

- Control request frequency: Don't be too greedy; sending a large number of requests in a short time may get you banned. You can use the time.sleep() function to add delays between requests.

- Exception handling: The web environment is complex, and our code should be able to gracefully handle various possible exceptions.

- Data cleaning: Data scraped from web pages often requires further processing before use, such as removing extra whitespace, converting data types, etc.

- Regular maintenance: The structure of websites may change, so we need to regularly check and update our scraping code.
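The first three points above can be combined into one small helper. This is a sketch under stated assumptions rather than a recommended implementation: the names `build_robot_rules` and `fetch_politely`, the `USER_AGENT` value, and the default delay and retry counts are all invented for illustration. It uses only the standard library, and the actual download function is passed in as `fetch`, so it works with Requests or anything else.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "my-learning-scraper"  # hypothetical name for our scraper


def build_robot_rules(robots_txt):
    """Parse the text of a robots.txt file into a rule checker."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def fetch_politely(urls, fetch, rules, delay=1.0, retries=2):
    """Fetch each allowed URL with a delay and a few retries.

    `fetch` is any callable that takes a URL and returns its content.
    Network-style errors (subclasses of OSError, which includes the
    exceptions raised by Requests) are retried up to `retries` times.
    """
    results = {}
    for url in urls:
        if not rules.can_fetch(USER_AGENT, url):
            print(f"Skipping (disallowed by robots.txt): {url}")
            continue
        for attempt in range(1, retries + 2):
            try:
                results[url] = fetch(url)
                break  # success, stop retrying this URL
            except OSError as exc:
                print(f"Attempt {attempt} failed for {url}: {exc}")
            finally:
                time.sleep(delay)  # pause after every attempt
    return results
```

In real use, `fetch` might be something like `lambda url: requests.get(url, timeout=10).text`, and the robots.txt text would come from the site's own `/robots.txt`. `RobotFileParser` can also download the file itself through its `set_url()` and `read()` methods.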

Conclusion

Python web scraping is a powerful and fun skill. Through this introduction, you now understand its basic concepts, common tools, and how to implement a simple scraping task. But this is just the beginning; there are many interesting techniques in the world of web scraping waiting for you to explore.

Remember, technology is neutral; the key is how we use it. When performing web scraping, always adhere to legal and ethical guidelines, respecting others' work and privacy.

So, are you ready to start your Python web scraping journey? Trust me, it's going to be a journey full of surprises and rewards. Let's dive into the ocean of data together and discover more interesting treasures!

Do you have any thoughts or questions? Feel free to share them in the comments, and let's discuss, learn, and grow together!
